If you are familiar with theory of computation, perhaps you have caught yourself thinking of the relationship between NP and P as analogous to the relationship between RE and R.

That is, nondeterministic polynomial-time is to polynomial-time as Turing-recognizable is to Turing-decidable.

I certainly have thought this, and I think it’s because I visualize NP as a “positive” extension of P, with counterpart coNP,

just as RE is a “positive” extension of R with counterpart coRE.

On the face of it, this rather seems like a misconception.

NP is formed from P by introducing nondeterminism, whereas RE is formed from R by introducing

non-halting behavior, and these are quite different concepts.

In particular, if we add non-halting behavior to poly-time TMs, by requiring only that

the TM runs in poly-time if it halts at all, we still only obtain the class of languages P.

And on the other hand, non-deterministic TMs are equivalent to deterministic TMs (see e.g. Sipser 2nd edition, Theorem 3.16), so adding non-determinism to decider TMs we expect to remain at the class of languages R.

Nevertheless, this ignored StackOverflow answer claims a very close analogy in terms of verifiers, where just as NP is the class of languages with polynomial-time verifiers, RE is the class of languages which have verifiers at all.

In fact this is true, but only if we allow the runtime of the verifier to be unbounded in the length of the original input, which seems like cheating considering that the runtime of a poly-time verifier must be uniformly bounded by a poly-time function in the length of the original input.

At the core of the problem is the definition of runtime and halting / non-halting for nondeterministic and verifier TMs.

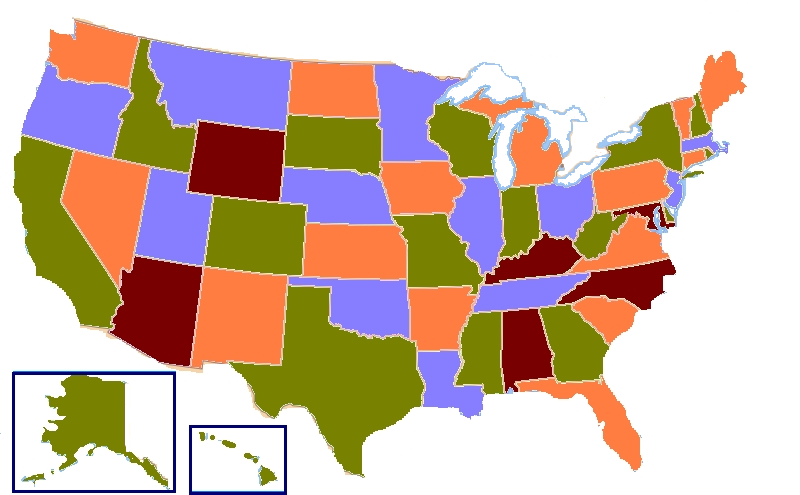

I summarize the discussion with the following table:

| Model |

Polynomial bound |

Finite bound |

Halting |

No bound |

| TM | P | R | R | RE |

| Nondet. TM | NP | R | R | RE |

| Verifier TM | NP | R | RE | RE |

Given this, I think the correspondence between P : NP and R : RE is essentially a fluke,

only present if we use verifiers rather than NTMs and if we only require that they halt,

not that their runtime has a finite bound.

The rest of this post just elaborates on and explains the above table.

Non-determinism and verifiers, in general

It is perhaps clearer to think of these concepts in the most general setting.

Therefore, let us suppose that a model of computation

begins with a collection of machines, a collection of possible inputs,

and a collection of possible configurations.

For any machine \(M\) and input \(w\), there is an initial configuration —

e.g. for TMs it would be the tuple of the machine \(M\), the initial state \(q_0\), and the initial tape containing \(w\).

Then we have a step function (or “small-step semantics”) which maps any configuration to a new configuration,

taking one unit of time.

Lastly there are some final configurations, which do not step.

The evaluation of \(M\) on some input \(w\) (i.e., the “big-step semantics”)

is the final configuration that is ultimately produced by a sequence of small-steps, if it exists.

Moreover, let’s simplify this so that the output is binary, by saying that all final configurations either accept or reject.

A nondeterministic model of computation is the same but the small-step semantics can map a configuration to multiple possible configurations.

In this case, \(M\) accepts input \(w\) if it is possible to arrive at an accepting configuration.

(A discussion for another day is whether there are other reasonable definitions of the language of an NTM. Since there are many possible final configurations, we clearly need some way to aggregate all the configurations into one, and so it is natural to define some outputs to override others. In the standard definition we take accepting to override rejecting. But we could also take, for instance, non-halting behavior to override accepting!)

A verifier model of computation consists of an underlying model of computation, in which inputs are ordered pairs. A verifier machine \(\text{ver}(M)\) starts by mapping the input \(x\) to a new input \((x,w)\), where \(w\) is the witness, feeding that to \(M\). We formally say that \(\text{ver}(M)\) accepts \(x\) if there exists a witness \(w\) for which \(M\) accepts \((x,w)\).

Runtime bounds for non-deterministic and verifier TMs

To obtain \(NP\) from \(P\), we have to understand how to bound the runtime of a verifier (VTM) or nondeterministic TM (NTM) by a polynomial.

In general, the runtime of a verifier or NTM must be a notion that does not depend on the particular

branch executed, or the particular witness string.

If \(f(n)\) is some general function of \(n\), we can say that an NTM’s runtime is bounded by \(f(n)\) if, on any input of size \(n\), there is no branch with length longer than \(f(n)\) (including non-halting branches).

A VTM’s runtime is bounded by \(f(n)\) if, on any input of size \(n\) and any witness,

the runtime is bounded by \(f(n)\).

Using this definition, if we require \(f\) to be a polynomial, then NTMs and VTMs both give us NP.

And then if we allow \(f\) to return any finite value (so, the runtime has to be bounded by a fixed function of the input),

then both NTMs and VTMs give us R.

The constant bound on the runtime means that we can simply try all possible branches

or all possible witnesses with a deterministic TM, and that TM will always halt.

But the difference between NTMs and VTMs arises if we formally require the strict bound \(f(n) = \infty\). What this is saying is that all branches of the NTM are halting, and that the VTM halts on every input, witness pair. For NTMs, we can still simulate this with a deterministic TM, because if all branches of an NTM are halting, then we can try all of them and we will eventually halt.

But for a VTM, we cannot try all witnesses, because there are infinitely many! It does not really matter that the VTM

halts on each individual one.

With a VTM, requiring the TM to always halt is no barrier to us checking a Turing-recognizable but not decidable property, because we get an existential quantification over all finite strings (an infinite set) for free.

So we can say that RE is the set of languages recognized by a verifier

TM which always halts.

But this is somewhat dubious because maybe the “right” definition of the runtime of a verifier TM is the supremum over all witnesses, in which case a verifier for a language in RE but not in R will have runtime \(\infty\).

And maybe the right definition of a halting verifier is one whose runtime is finite.

Under this definition, the subtle difference between NTMs and VTMs, highlighted in the original table, would disappear.

References

My definition of a decider / halting NTM does agree with Sipser 2nd edition, page 152, where he requires that a decider NTM halt on all branches. Sipser page 255 only defines the runtime for decider NTMs.

This CS StackExchange thread also gives several different definitions of a nondeterministic decider, which are all equivalent and recognize R.

As for verifiers, on Sipser page 265 it is suggested (but only really for the polynomial case) that the runtime for verifiers should be the supremum over all witnesses.

In descriptive complexity, Fagin’s theorem

shows that NP arises from P

essentially by allowing existentially-quantified second-order variables.

On the other hand, RE arises from R by allowing existential quantification over \(\mathbb{N}\).

This old CS StackExchange question seems to me a great question which is asking what I asked, but its answer does not really say anything.

This post in hebrew might be mildly relevant but I can’t read it.